Average movies are rated highly

Statistical patterns on IMDb, RT, and Metacritic

This article uses data from IMDb and OMDb to show a tendency in the critical discernment of cineasts.

Amazon-owned IMDb is a commercial operation now dominated by the marketing of new movies and US TV. It’s mostly pictures of celebrities. Even so, it remains an “Internet Movie Database”, on the web since 1993.

Among other data, IMDb publishes a rating of each entry’s quality. On the face of it, such ratings would be most useful to prospective moviegoers if they were linearly (that is, evenly) distributed on the scale, which runs from 1 to 10. In a linear distribution, the 10% of works that are least well liked would be rated 1: a clear signal to consider other options at the theatre. As shown in this article, the 10% of works that are least well liked actually take up the entire bottom half of the scale (1–5) on IMDb.
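To make the idea of a linear scale concrete: on such a scale, a work’s rating would simply be its decile among all rated works. The sketch below is a hypothetical illustration (the function name `linear_ratings` and the example scores are invented), not something any of the three sites does.

```python
from bisect import bisect_left


def linear_ratings(scores: list[float]) -> list[int]:
    """Re-express scores on a linear 1-10 scale, one decile per point.

    The lowest 10% of scores become 1, the next 10% become 2, and so on.
    Tied scores share the rank of their first occurrence, so they get
    the same linear rating.
    """
    ordered = sorted(scores)
    n = len(ordered)
    # bisect_left counts how many scores are strictly lower than s;
    # integer arithmetic avoids floating-point trouble at decile boundaries.
    return [bisect_left(ordered, s) * 10 // n + 1 for s in scores]


# Invented scores for ten works, all in the upper half of a 1-10 scale.
example = [5.1, 6.2, 6.5, 6.8, 7.0, 7.1, 7.3, 7.6, 7.9, 8.5]
print(linear_ratings(example))  # [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```

Even though every invented score sits above the midpoint of the original scale, the linear version spreads them from 1 to 10.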

IMDb ratings are based on individual ratings submitted by tens of millions of registered users. Two rivals, Rotten Tomatoes (RT, 1998) and Metacritic (2001), instead report only on the opinions of selected critics, generally professionals. None of the three review aggregators processes its data in such a way as to produce the linear scale of my example. Nor do I.

In this article, I present some statistical descriptions of ratings on IMDb, RT and Metacritic. I do this to demonstrate how non-linear they are, and therefore what is indicated by a difference between any two ratings.


About the data set

Data in this article was collected in the summer and autumn of 2021, from two sources. The first of those is OMDb, the “Open Movie Database”, a headless inheritor of the originally noncommercial IMDb. I have been a financial supporter of OMDb since 2017. The service collects data from IMDb, RT and Metacritic, among other sources.

I do not have direct access to OMDb’s database, so I had to retrieve the data one entry at a time under a daily request cap, which took months. Many of those requests failed for a variety of reasons, including missing data on the server side. The total number of non-error responses across the range of valid identifiers was 4,895,043.
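Each OMDb response is a JSON object. The sketch below assumes OMDb’s usual field names (`imdbRating`, `Metascore`, `Ratings`, `Response`), with missing values given as the string "N/A", error responses marked with "Response": "False", and the Rotten Tomatoes score appearing only inside the "Ratings" list. The helper names and the sample payload are invented for illustration; this is not necessarily how the data set here was parsed.

```python
import json
from typing import Optional


def to_number(value: Optional[str]) -> Optional[float]:
    """Turn OMDb strings such as "7.2", "91%" or "$1,234" into numbers; "N/A" becomes None."""
    if value is None or value in ("", "N/A"):
        return None
    return float(value.replace("$", "").replace(",", "").rstrip("%"))


def parse_omdb(payload: str) -> Optional[dict]:
    """Pull the IMDb, RT and Metacritic ratings out of one OMDb JSON response.

    Returns None for error responses, which OMDb marks with "Response": "False".
    """
    data = json.loads(payload)
    if data.get("Response") != "True":
        return None
    # RT has no top-level field; its score sits in the "Ratings" list.
    rt_value = next(
        (r.get("Value") for r in data.get("Ratings", [])
         if r.get("Source") == "Rotten Tomatoes"),
        None,
    )
    return {
        "imdb_id": data.get("imdbID"),
        "imdb": to_number(data.get("imdbRating")),
        "rt": to_number(rt_value),
        "metacritic": to_number(data.get("Metascore")),
    }


# Invented response; real ones contain many more fields.
sample = json.dumps({
    "imdbID": "tt0000000",
    "imdbRating": "7.2",
    "Metascore": "N/A",
    "Ratings": [
        {"Source": "Internet Movie Database", "Value": "7.2/10"},
        {"Source": "Rotten Tomatoes", "Value": "91%"},
    ],
    "Response": "True",
})
print(parse_omdb(sample))
```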

To fill gaps in the OMDb data, I downloaded public TSV files (basics and ratings) directly from IMDb on 2021-11-13. Combining these with the OMDb set, I had some non-error data on 7,446,290 unique IMDb-indexed feature films, TV series, and episodes of series. Only 1,205,254 have an IMDb rating.
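IMDb’s TSV exports are tab-separated, use `\N` for missing values and share the `tconst` identifier column, so attaching `title.ratings` to `title.basics` is a dictionary lookup. A minimal standard-library sketch; the function names are hypothetical, and this is not necessarily how the data set here was assembled. For the real gzipped files, the handles would come from `gzip.open(path, "rt", encoding="utf-8", newline="")`.

```python
import csv
import io
from typing import Iterator, TextIO


def read_imdb_tsv(handle: TextIO) -> Iterator[dict]:
    """Yield rows of an IMDb TSV export as dicts, with "\\N" turned into None."""
    # Titles can contain stray quote characters, so quoting is switched off.
    reader = csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    for row in reader:
        yield {key: (None if value == r"\N" else value) for key, value in row.items()}


def join_basics_and_ratings(basics: TextIO, ratings: TextIO) -> dict:
    """Index title.basics rows by tconst and attach averageRating and numVotes where present."""
    titles = {row["tconst"]: row for row in read_imdb_tsv(basics)}
    for row in read_imdb_tsv(ratings):
        title = titles.get(row["tconst"])
        if title is not None:
            title["averageRating"] = float(row["averageRating"])
            title["numVotes"] = int(row["numVotes"])
    return titles


# Tiny invented extracts in the same column layout as the real files.
basics_tsv = (
    "tconst\ttitleType\tprimaryTitle\toriginalTitle\tisAdult\tstartYear\tendYear\truntimeMinutes\tgenres\n"
    "tt0000000\tmovie\tExample\tExample\t0\t2010\t\\N\t95\tDrama\n"
    "tt0000002\ttvSeries\tUnrated\tUnrated\t0\t2015\t2018\t\\N\tComedy\n"
)
ratings_tsv = "tconst\taverageRating\tnumVotes\ntt0000000\t7.1\t1234\n"

titles = join_basics_and_ratings(io.StringIO(basics_tsv), io.StringIO(ratings_tsv))
rated = [t for t in titles.values() if "averageRating" in t]
print(len(titles), len(rated))
```

As in the real data, only some of the joined titles end up with a rating.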

The data set includes some information other than ratings, such as the year of release. Owing to lax editorial policy, some of that data is bad. TV series are mostly assigned a runtime per episode, but many have a more useful total runtime instead, which can reach thousands of minutes. I have not attempted to clean that up, so please view all references to runtime as suspect.

RT and Metacritic both present an “audience” or “user” rating as well as a critics’ rating. However, only the critics’ rating on each of the two sites is available in the data set. The data does not include unweighted averages of user votes, nor any individual reviews or critics.

Scales

There are many reasons why ratings are not linearly distributed, but a desire to aggregate by the simplest measure, the arithmetic mean, is apparently not one of them. User ratings on IMDb are filtered through an algorithm to produce the “IMDb rating”, a weighted average. RT’s percentage rating (the “Tomatometer”, the share of counted reviews that are positive) is not weighted. Metacritic’s “Metascore” is weighted by critic “stature” (social status).

IMDb does not publish its algorithm for weighting user ratings, partly because the algorithm is intended as a countermeasure against abuse: brigading, “ballot stuffing” and other dishonest voting practices. Metacritic does not publish its weights either. Because the unweighted averages are not available, I cannot judge the effects of the weighting.
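Since neither weighting scheme is published, the following can only illustrate the general idea, with invented numbers. It compares a plain arithmetic mean, a mean weighted by hypothetical per-critic weights (loosely in the spirit of Metacritic’s “stature”), and a Bayesian average, a common technique that pulls titles with few votes toward a prior mean. None of these functions is the actual IMDb or Metacritic algorithm.

```python
def arithmetic_mean(values: list[float]) -> float:
    """Plain average: every rating counts equally."""
    return sum(values) / len(values)


def weighted_mean(values: list[float], weights: list[float]) -> float:
    """Each value counts in proportion to its weight (e.g. a critic's assumed stature)."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def bayesian_average(mean: float, votes: int, prior_mean: float, prior_votes: int) -> float:
    """Blend a title's own mean with a prior mean.

    prior_votes acts like a number of imaginary votes at prior_mean, so titles
    with few real votes are pulled strongly toward prior_mean.
    """
    return (votes * mean + prior_votes * prior_mean) / (votes + prior_votes)


scores = [90, 80, 40]      # invented critic scores
stature = [3.0, 1.0, 1.0]  # invented weights
print(arithmetic_mean(scores))          # 70.0
print(weighted_mean(scores, stature))   # 78.0
print(f"{bayesian_average(9.0, 10, 6.9, 1000):.2f}")       # 6.92
print(f"{bayesian_average(9.0, 100_000, 6.9, 1000):.2f}")  # 8.98
```

With 10 votes, a 9.0 average is dragged most of the way to the prior; with 100,000 it barely moves. A scheme of this kind pulls thinly voted titles toward the overall mean.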

The midpoint of IMDb’s rating scale is 5.5, that is the mean of 1 and 10. RT uses a scale of 0 to 100, for a midpoint of 50. Metacritic uses 1 to 100, for a midpoint of 50.5. I have not attempted to reframe their data to a unified scale.

Glossary

In this article, I am using the symbol “N” for the sample size of ratings in each category of the data, and “CV” for the coefficient of variation.

Correlations, where they appear, are Pearson correlation coefficients. These are symmetric: the correlation of A with B is the same as that of B with A.
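Both measures are short formulas. A minimal sketch of each follows; the use of the sample standard deviation (dividing by n − 1, as pandas does by default) is an assumption about how the CVs in this article were computed, and the example data is invented.

```python
import math


def coefficient_of_variation(values: list[float]) -> float:
    """CV = standard deviation / mean, using the sample standard deviation (n - 1)."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    return sd / mean


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient, between -1 and 1.

    The formula treats xs and ys identically, so pearson(xs, ys) == pearson(ys, xs).
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least two items")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Invented ratings for five titles, printed as percentages like the tables here.
imdb = [6.1, 7.4, 8.0, 5.5, 6.9]
rt = [40, 75, 90, 20, 55]
print(f"CV {coefficient_of_variation(imdb):.1%}, correlation {pearson(imdb, rt):.1%}")
```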

Analysis

General description

The following table shows common statistical descriptors of all ratings in the data.

| All titles | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 1,205,254 | 6.92 | 5.1 | 6.2 | 7.1 | 7.9 | 8.5 | 20.3% |
| RT | 57,467 | 55.65 | 17 | 33 | 57 | 80 | 91 | 49.0% |
| Metacritic | 15,353 | 57.73 | 34 | 46 | 59 | 71 | 80 | 30.3% |

The table shows that IMDb ratings, though they are published for only a fraction of IMDb entries, still present much larger sample sizes than the other two aggregators that appear on OMDb.

With regard to a linear distribution, it is clearly Rotten Tomatoes that best approximates this ideal. Relative to each site’s scale, RT shows the most centered mean, the most centered median (50th percentile), and the greatest variability relative to its mean, as measured by CV. However, even RT’s mean and median are both above the midpoint of its scale (50).
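It helps to know what the ideal would look like in numbers. If ratings were spread evenly across each site’s scale (treating the scales as continuous, which is an approximation), the mean would sit at the midpoint and the standard deviation would be the scale’s width divided by √12, the standard result for a uniform distribution. A minimal sketch; the function name is hypothetical.

```python
import math


def uniform_benchmark(low: float, high: float) -> tuple[float, float, float]:
    """Mean, standard deviation and CV of a continuous uniform distribution on [low, high].

    The mean is simply the midpoint of the scale; the SD of a uniform
    distribution is (high - low) / sqrt(12).
    """
    mean = (low + high) / 2
    sd = (high - low) / math.sqrt(12)
    return mean, sd, sd / mean


for site, low, high in [("IMDb", 1, 10), ("RT", 0, 100), ("Metacritic", 1, 100)]:
    midpoint, _, cv = uniform_benchmark(low, high)
    print(f"{site}: midpoint {midpoint}, CV {cv:.1%}")
```

This prints uniform CVs of about 47.2% for IMDb, 57.7% for RT and 56.6% for Metacritic. Against those benchmarks, RT’s observed 49.0% comes closest, while IMDb’s 20.3% is less than half of its uniform value.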

The most salient data point in the table is the median IMDb rating of 7.1, 1.6 points above the midpoint of the scale. The data set includes the number of votes per entry on IMDb, but I have not weighted any of the percentiles by that information. The 7.1 is simply the median rating of all titles (movies, series, episodes and so on) for which IMDb presents an IMDb rating at all in its TSV export.
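The descriptors in these tables are straightforward to compute. A minimal sketch, assuming a plain list of ratings in which every title counts once, regardless of its vote count. Percentiles here use linear interpolation between neighbouring values, the default in numpy and pandas; that the article’s figures were computed the same way is an assumption, as is the sample standard deviation in the CV.

```python
import math


def percentile(sorted_values: list[float], p: float) -> float:
    """p-th percentile (0-100) with linear interpolation between neighbours."""
    h = (len(sorted_values) - 1) * p / 100
    lo = math.floor(h)
    hi = min(lo + 1, len(sorted_values) - 1)
    return sorted_values[lo] + (h - lo) * (sorted_values[hi] - sorted_values[lo])


def describe(ratings: list[float]) -> dict:
    """N, mean, selected percentiles and CV, with no weighting by vote count."""
    values = sorted(ratings)
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))  # sample SD
    row = {"N": n, "Mean": mean}
    for p in (10, 25, 50, 75, 90):
        row[f"P{p}"] = percentile(values, p)
    row["CV"] = sd / mean
    return row


# Invented ratings for seven titles.
print(describe([5.0, 6.1, 6.8, 7.1, 7.3, 7.9, 8.4]))
```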

Correlations between site ratings

The following table describes the statistical correlation between ratings in each pair of the three sites under review.

| Pair of sources | Correlation |
|---|---|
| IMDb against RT | 67.7% |
| IMDb against Metacritic | 68.6% |
| RT against Metacritic | 87.1% |

IMDb’s lower correlation with the other two sites, as compared to the correlation between RT and Metacritic, can be attributed to its lower variability. That variability, in turn, is primarily the result of a larger sample size, i.e. more people’s opinions. There is no reason, from this data alone, to assume a difference in taste.

Correlations with quantitative properties

| Site | Year of release | Box office | Vote count on IMDb | Runtime ⚠️ |
|---|---|---|---|---|
| IMDb | 15.3% | 11.0% | 1.2% | -8.7% |
| RT | -4.5% | 2.0% | 10.4% | 11.4% |
| Metacritic | -12.3% | 1.8% | 13.6% | 14.2% |

IMDb displays a preference for recent, higher-earning and shorter works, while the other two sites seem largely indifferent to box office and display positive correlations with runtime, meaning they rate longer works more favourably. Metacritic is especially fond of older works.

As noted, runtime data in the full data set is flawed. When the data is restricted to movies only, so that the runtime problem is less severe, the correlation between IMDb rating and runtime weakens to -5.3%. The rating–runtime correlations for the other two sites change less (by up to 0.2 percentage points).

By type

Movies versus series

I have simplified IMDb’s typology as noted in each caption. Please note that runtime data was not used for the new typology, so “movies” includes shorts and other IMDb TSV categories named in the following captions.
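A hypothetical sketch of such a simplification: the mapping below collapses the `titleType` values found in IMDb’s TSV export into three groups, putting shorts and other minor types under “movie” and dropping types such as `videoGame`. Which minor type goes where is an assumption, and the function names are invented.

```python
from collections import defaultdict
from statistics import median
from typing import Optional

# Hypothetical grouping of IMDb titleType values; types not listed are dropped.
SIMPLIFIED_TYPE = {
    "movie": "movie",
    "tvMovie": "movie",
    "short": "movie",
    "tvShort": "movie",
    "video": "movie",
    "tvSpecial": "movie",
    "tvSeries": "series",
    "tvMiniSeries": "series",
    "tvEpisode": "episode",
}


def simplified_type(title_type: str) -> Optional[str]:
    """Collapse an IMDb titleType into movie/series/episode, or None if unmapped."""
    return SIMPLIFIED_TYPE.get(title_type)


def median_rating_by_type(titles: list[tuple[str, float]]) -> dict[str, float]:
    """Median rating per simplified type, from (titleType, rating) pairs."""
    groups = defaultdict(list)
    for title_type, rating in titles:
        group = simplified_type(title_type)
        if group is not None:
            groups[group].append(rating)
    return {group: median(ratings) for group, ratings in groups.items()}


# Invented pairs; the video game is dropped by the mapping.
print(median_rating_by_type([("movie", 6.0), ("short", 7.0), ("tvEpisode", 8.0), ("videoGame", 9.0)]))
```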

| Movies | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 544,108 | 6.43 | 4.6 | 5.6 | 6.5 | 7.4 | 8.2 | 22.1% |
| RT | 56,922 | 55.59 | 17 | 33 | 57 | 80 | 91 | 49.0% |
| Metacritic | 15,345 | 57.73 | 34 | 46 | 59 | 71 | 80 | 30.3% |

| Series | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 90,585 | 6.83 | 4.8 | 6.1 | 7.1 | 7.9 | 8.4 | 21.4% |
| RT | 339 | 65.82 | 30 | 50 | 69 | 86 | 93 | 36.5% |
| Metacritic | 0 | — | — | — | — | — | — | — |

Series are rated higher than movies. Episodes are more mixed, with even smaller non-IMDb sample sizes, but they are rated even higher than series are on IMDb.

| Episodes | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 570,561 | 7.40 | 6.0 | 6.8 | 7.6 | 8.1 | 8.7 | 16.2% |
| RT | 206 | 53.09 | 4 | 29 | 59 | 78 | 92 | 57.4% |
| Metacritic | 8 | 65.75 | 58 | 59 | 60 | 72 | 78 | 15.4% |

The sample size of episodes on IMDb is higher than that of movies. This is part of what skews the overall median, but even movies alone are one full point above the middle of the scale.

By period: Movies only

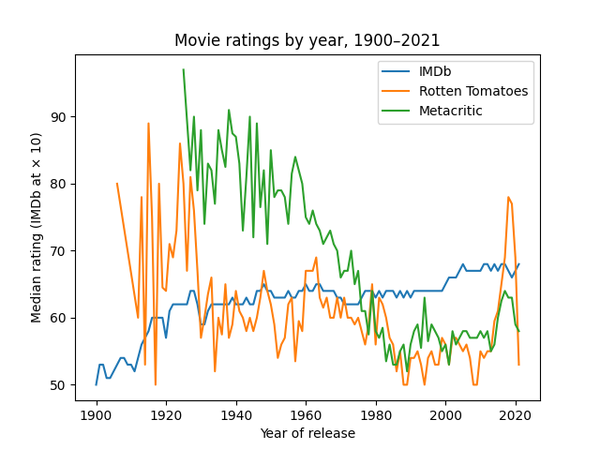

Colourful lines for visual interest.

On IMDb, the rise in ratings across the last decade is even more pronounced when series and episodes are included.

| 2010s, all types | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 482,438 | 7.09 | 5.2 | 6.3 | 7.3 | 8.0 | 8.7 | 20.1% |
| RT | 16,777 | 57.26 | 17 | 33 | 60 | 82 | 93 | 49.2% |
| Metacritic | 6,359 | 58.13 | 34 | 47 | 60 | 71 | 79 | 29.4% |

The median IMDb rating (itself a weighted average of user votes) for all content released in the 2010s is 7.3. That is high.
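Grouping by period only takes integer division on the year of release. A minimal sketch, assuming (year, rating) pairs such as IMDb’s `startYear` and `averageRating` columns; which year should count for a long-running series is itself a judgment call, and the function name is hypothetical.

```python
from collections import defaultdict
from statistics import median
from typing import Optional


def median_by_decade(titles: list[tuple[Optional[int], Optional[float]]]) -> dict[int, float]:
    """Median rating per decade of release, from (year, rating) pairs.

    Titles missing either value are skipped; 2014 // 10 * 10 gives 2010.
    """
    by_decade = defaultdict(list)
    for year, rating in titles:
        if year is None or rating is None:
            continue
        by_decade[year // 10 * 10].append(rating)
    return {decade: median(ratings) for decade, ratings in sorted(by_decade.items())}


# Invented pairs, including one title with no known year.
print(median_by_decade([(2011, 7.0), (2019, 8.0), (2005, 6.0), (None, 9.0)]))
```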

Adult entertainment

The following table describes those titles for which isAdult is true in IMDb TSV, or “Adult” appears in the list of genres.
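The selection rule just described fits in a single predicate. This sketch assumes that the flag arrives as the string "1" or "0", as in IMDb’s `isAdult` column, and that genres are comma-separated, as in both IMDb’s TSV and OMDb’s `Genre` field. The function name is hypothetical.

```python
from typing import Optional


def is_adult(is_adult_flag: Optional[str], genres: Optional[str]) -> bool:
    """True if the isAdult column is "1" or "Adult" is one of the listed genres.

    Accepts IMDb TSV genres ("Drama,Romance") as well as OMDb's ("Drama, Romance").
    """
    genre_list = [g.strip() for g in genres.split(",")] if genres else []
    return is_adult_flag == "1" or "Adult" in genre_list


print(is_adult("0", "Drama, Adult"), is_adult("0", "Drama,Romance"))
```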

| Adult | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 20,573 | 6.30 | 4.7 | 5.5 | 6.3 | 7.1 | 7.8 | 20.3% |
| RT | 195 | 48.77 | 14 | 28 | 49 | 68 | 84 | 52.6% |
| Metacritic | 3 | 47.33 | 38 | 39 | 41 | 52 | 59 | 30.8% |

This is the most readily available subset of the data where ratings are clearly depressed, but even here, where RT and Metacritic both dip below their midpoints, IMDb remains well above its midpoint.

Awards

OMDb includes a text field for awards and nominations. The following table limits the selection to entries for which this field is populated at all, that is every entry that is documented as having been nominated for or won any award.
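Treating the field as populated means testing for more than emptiness, assuming OMDb fills absent values with the text "N/A" as it does in other fields. A hypothetical predicate, with invented example strings:

```python
from typing import Optional


def has_awards_entry(awards: Optional[str]) -> bool:
    """True when OMDb's Awards text is present and not the "N/A" placeholder."""
    return bool(awards) and awards.strip() not in ("", "N/A")


for text in ("N/A", "", None, "2 wins & 3 nominations"):
    print(repr(text), has_awards_entry(text))
```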

| Awards or nominations | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 118,984 | 6.69 | 5.3 | 6.1 | 6.8 | 7.4 | 8.0 | 16.8% |
| RT | 30,652 | 63.55 | 25 | 45 | 67 | 85 | 94 | 39.9% |
| Metacritic | 12,617 | 60.09 | 37 | 49 | 62 | 72 | 81 | 28.1% |

As with adult entertainment, IMDb ratings dip closer to the midpoint, but here, RT and Metacritic ratings both rise by several points.

Country of production

The Country field in OMDb data is dubious. It often mentions multiple countries with no obvious regard for where filming was done, whence it was financed, and where creative control rested. That said, there are notable differences in ratings.
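Because the field is a comma-separated list, any split into US and non-US productions needs a rule for co-productions. The two rules below are hypothetical, and so is the assumption that OMDb spells the country either “United States” or “USA”; which rule, if either, matches the tables here is not stated.

```python
from typing import Optional

# Assumption: the US may be written either way in OMDb's Country field.
US_NAMES = {"United States", "USA"}


def countries(field: Optional[str]) -> list[str]:
    """Split OMDb's Country text, e.g. "United States, Canada", into a list."""
    if not field or field == "N/A":
        return []
    return [c.strip() for c in field.split(",") if c.strip()]


def is_us(field: Optional[str], rule: str = "any") -> bool:
    """Classify a title as a US production under one of two hypothetical rules.

    "any": the US appears anywhere in the list (co-productions count as US).
    "first": only the first-listed country counts.
    """
    listed = countries(field)
    if rule == "any":
        return any(c in US_NAMES for c in listed)
    if rule == "first":
        return bool(listed) and listed[0] in US_NAMES
    raise ValueError(f"unknown rule: {rule}")


field = "Canada, United States"
print(is_us(field, "any"), is_us(field, "first"))  # True False
```

The same title lands on different sides of the split depending on the rule, which is one reason to treat country-based comparisons with caution.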

|  | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 262,850 | 6.76 | 4.8 | 5.9 | 7.0 | 7.8 | 8.4 | 20.9% |
| RT | 27,774 | 51.70 | 15 | 29 | 50 | 75 | 89 | 52.9% |
| Metacritic | 7,254 | 55.29 | 31 | 43 | 56 | 68 | 78 | 32.3% |

|  | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 792,568 | 6.92 | 5.1 | 6.2 | 7.1 | 7.9 | 8.5 | 19.9% |
| RT | 29,693 | 59.34 | 20 | 39 | 62 | 82 | 92 | 45.0% |
| Metacritic | 8,099 | 59.92 | 36 | 49 | 62 | 72 | 81 | 28.2% |

For reference, the median IMDb rating of productions from my native Sweden is 6.4 (below average, unlike general non-US productions), with median Metacritic and RT ratings both at 69 (above average, and more so than general non-US productions).

Money

IMDb lives off hype, but do people like commercial successes best? On IMDb, they do. The correlations between ratings and box office are given above; here is some extra detail.

| Box office under $1M | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 8,791 | 6.38 | 5.0 | 5.8 | 6.5 | 7.1 | 7.6 | 16.6% |
| RT | 7,377 | 63.35 | 24 | 44 | 68 | 85 | 94 | 41.1% |
| Metacritic | 5,065 | 60.22 | 38 | 50 | 62 | 72 | 80 | 26.6% |

|  | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 687 | 6.91 | 5.8 | 6.4 | 6.9 | 7.6 | 8.0 | 12.6% |
| RT | 673 | 66.48 | 31 | 50 | 71 | 86 | 93 | 34.6% |
| Metacritic | 686 | 61.52 | 41 | 50 | 62 | 72 | 83 | 25.2% |

As a backdrop to this, the median IMDb rating for entries without any data on box-office takings is 7.1, as in the full data set, which is not surprising; the sample size (1,188,392) is a strong majority of all rated titles. Box-office data exists on only 16,862 entries, and the median IMDb rating on (all of) those is low at 6.5, the same as the sub-$1M subset.
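Box-office figures arrive as text. A minimal sketch, assuming OMDb’s `BoxOffice` field looks like "$1,234,567" and holds "N/A" when unknown, of parsing it and taking the median rating below a threshold such as the $1M used above. The helper names and sample values are hypothetical.

```python
from statistics import median
from typing import Optional


def box_office_usd(field: Optional[str]) -> Optional[int]:
    """Parse BoxOffice text such as "$1,234,567"; missing or "N/A" gives None."""
    if not field or field.strip() == "N/A":
        return None
    return int(field.replace("$", "").replace(",", ""))


def median_rating_below(titles: list[tuple[Optional[str], float]], limit_usd: int = 1_000_000) -> float:
    """Median IMDb rating of titles whose known box office is under limit_usd."""
    ratings = []
    for box_office, rating in titles:
        usd = box_office_usd(box_office)
        if usd is not None and usd < limit_usd:
            ratings.append(rating)
    return median(ratings)


# Invented sample: two titles under $1M, one above, one unknown.
sample = [("$250,000", 6.0), ("$900,000", 7.0), ("$50,000,000", 8.0), ("N/A", 9.0)]
print(median_rating_below(sample))  # 6.5
```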

Vote count

Computer scientist Daniel M. German once observed that IMDb ratings become more reliable at 10,000 votes.¹ More votes do reduce noise, but unless they correlate with some intersubjective aesthetic quality, they will pull a title’s rating toward the mean. A general correlation between ratings and vote counts is computed above. Let’s take a closer look at titles above German’s particular limit.
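Restricting to well-voted titles is a simple filter once vote counts are numbers. A sketch, assuming counts come either as integers (IMDb’s `numVotes` column) or as comma-formatted strings (OMDb’s `imdbVotes`); treating the 10,000 cut-off as inclusive is an assumption, and the names are hypothetical.

```python
from typing import Optional, Union

MIN_VOTES = 10_000  # the threshold attributed to German in the text


def vote_count(field: Union[int, str, None]) -> Optional[int]:
    """Accept IMDb TSV integers or OMDb-style strings such as "12,345"."""
    if isinstance(field, int):
        return field
    if field is None or field.strip() in ("", "N/A"):
        return None
    return int(field.replace(",", ""))


def well_voted_ratings(titles: list[tuple[Union[int, str, None], float]],
                       min_votes: int = MIN_VOTES) -> list[float]:
    """Ratings of titles with at least min_votes votes; unknown counts are excluded."""
    kept = []
    for votes, rating in titles:
        count = vote_count(votes)
        if count is not None and count >= min_votes:
            kept.append(rating)
    return kept


# Invented (votes, rating) pairs.
print(well_voted_ratings([("12,345", 7.0), (9_999, 8.0), ("N/A", 6.0), (250_000, 7.5)]))
```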

| Above 10,000 IMDb votes | N | Mean | 10th pct. | 25th pct. | Median | 75th pct. | 90th pct. | CV |
|---|---|---|---|---|---|---|---|---|
| IMDb | 12,027 | 6.90 | 5.5 | 6.2 | 7.0 | 7.6 | 8.2 | 16.6% |
| RT | 8,478 | 62.25 | 20 | 40 | 68 | 86 | 94 | 44.2% |
| Metacritic | 6,837 | 57.93 | 34 | 45 | 58 | 71 | 82 | 31.6% |

There is a slight drop in median IMDb ratings here, but the other sites show higher ratings than the full data set. This is probably due to an unmeasured correlation with fame.

Conclusion

I refrain, in this article, from speculating on general reasons why average ratings on all three sites are above the midpoint of each respective scale. However, I will mention one reason why IMDb’s median is even higher, relative to its scale, than RT’s or Metacritic’s. In an earlier analysis of ratings on Filmtipset, I found that individuals’ ratings correlate negatively with their level of experience. Those critics on Filmtipset who had rated 1000 films dipped below the midpoint of the scale. It makes sense that professional critics, on RT and Metacritic, fall closer to the midpoint than does the broader population on IMDb. Professionals usually have more experience.

It is more obvious why ratings are not linearly distributed. The main reason is that, as individual votes accumulate on a potentially linear scale, the averages of those votes tend toward a normal (bell-shaped) distribution. That bunching is more pronounced on IMDb because the number of voters there is larger.
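A toy simulation shows the narrowing. It assumes, unrealistically, that every vote is drawn uniformly from the whole numbers 1 to 10 and that all titles share the same underlying appeal, so any spread between title averages is pure sampling noise. The model says nothing about why IMDb’s centre lands near 7 rather than 5.5; the function name and parameters are hypothetical.

```python
import random
import statistics


def spread_of_means(voters_per_title: int, titles: int = 500, seed: int = 1) -> float:
    """Standard deviation of per-title mean ratings in a toy model.

    Every vote is drawn uniformly from the whole numbers 1-10, and every title has
    the same underlying appeal, so all spread between titles is sampling noise.
    """
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.randint(1, 10) for _ in range(voters_per_title))
        for _ in range(titles)
    ]
    return statistics.stdev(means)


for voters in (1, 10, 100):
    print(voters, round(spread_of_means(voters), 2))
```

In theory, a single vote on this model has a standard deviation of √8.25 ≈ 2.87 points, and the spread of averages shrinks with the square root of the number of voters. Votes that use the whole scale still produce averages bunched in a narrow band.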

I argue that, for the purpose of finding good movies to watch, the combination of the two major trends I just mentioned is a drawback. Together, skewed averages and non-linear distributions make it hard to tell at a glance how people who’ve seen a given work would compare it to others. For example, a 6.8/10 on IMDb might look promising, but it’s below the median. It means in essence that most people who rated it liked it less than movies and series in general.

Rotten Tomatoes is already good enough for quickly screening available movies, because it is the least skewed and most variable of the three sites under review. Through the non-magic of pandas, I’ve produced a translation table for IMDb, by staking out the deciles as follows.

| IMDb rating | Linear rating |
|---|---|
| 1.0–5.0 | 1 |
| 5.1–5.8 | 2 |
| 5.9–6.3 | 3 |
| 6.4–6.7 | 4 |
| 6.8–7.0 | 5 |
| 7.1–7.3 | 6 |
| 7.4–7.6 | 7 |
| 7.7–8.0 | 8 |
| 8.1–8.4 | 9 |
| 8.5–10.0 | 10 |

The range from 1.0 to 5.0 and the range from 8.5 to 10.0 each cover 10% of site content. In between, the segments between deciles get as narrow as 0.3 points on the rating scale around the median work, which is rated highly at 7.1.
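Applying the translation table programmatically is a binary search over its upper bounds. A minimal sketch; the bounds are copied from the table, the function name is hypothetical, and the approach assumes ratings with one decimal, as IMDb publishes them. Rebuilding the bounds from raw data would be a decile computation (in pandas, for instance, `pandas.qcut(ratings, 10)`), though exactly which pandas calls produced the table is not stated.

```python
from bisect import bisect_left

# Upper bound of each linear rating from 1 to 9, from the translation table;
# anything above 8.4 is a 10.
UPPER_BOUNDS = [5.0, 5.8, 6.3, 6.7, 7.0, 7.3, 7.6, 8.0, 8.4]


def imdb_to_linear(imdb_rating: float) -> int:
    """Translate an IMDb rating (1.0-10.0) to the decile-based linear scale."""
    if not 1.0 <= imdb_rating <= 10.0:
        raise ValueError("IMDb ratings run from 1.0 to 10.0")
    # bisect_left returns the position of the first bound that is >= the rating,
    # so a rating equal to a bound stays in that bound's decile.
    return bisect_left(UPPER_BOUNDS, imdb_rating) + 1


for rating in (6.8, 7.1, 8.5):
    print(rating, "->", imdb_to_linear(rating))
```

The 6.8 from the example in the conclusion translates to 5, just below the middle of the linear scale.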