The forces of ratings inflation

Why critics and aggregators skew high

In this article, I speculate about why critics and audiences generally rate quality highly, whether it’s the quality of movies, consumer goods, businesses or human beings. A “high” rating here is any rating above the midpoint of any scale.

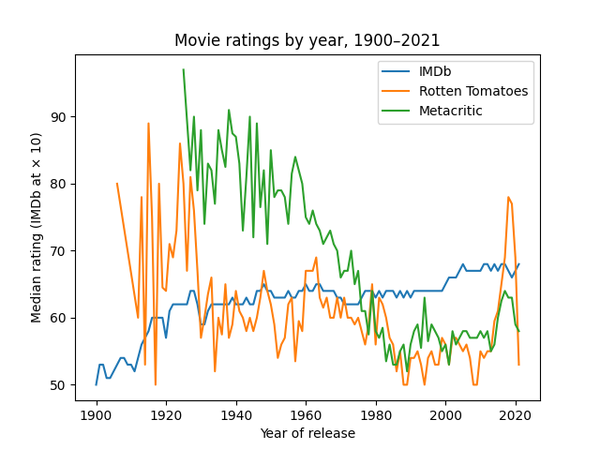

I write reviews as a hobby. In a 2021 statistical analysis, I found that average movies are rated highly on IMDb, and to a lesser extent on Rotten Tomatoes and Metacritic, three sites where I am not a contributor.

Median ratings by year have been consistently at or above the midpoint on all three sites since about 1912.

As a more extreme example, 51% of all ratings on Yelp are 5 out of 5 stars.1 The tendency to go high is nearly universal on consumer-facing sites where things are rated for quality. For example, it looks like BoardGameGeek (BGG) skews high like IMDb. Both sites collect ratings from an open pool of users, with little verification.

Hypothetical reasons

Off the top of my head and in no particular order, I think ratings skew high for the following reasons.

WYSIATI bias

Hypothetically, a user who skews high may just be trying to match what they see as normal, based on other people’s ratings. In this trivial model of the user’s psychology, the quality of the user’s experience is less relevant than the fact that high ratings are the most common.

This hypothesis, which can be called “What You See is All There Is” (WYSIATI) after Daniel Kahneman, is weak. It cannot explain why users started skewing high in the first place, nor why the phenomenon is so widespread in mutually independent venues. However, it is plausible that skewed ratings have come to constitute an implicit norm. The more cemented this norm gets, the harder it is to break.

Guidelines

When a user hovers over a potential rating for a board game on BGG, they see the following guidelines for interpretation of the site’s 10-point scale:

1. Awful - defies game description.

2. Very bad - won’t play ever again.

3. Bad - likely won’t play this again.

4. Not so good - but could play again.

5. Mediocre - take it or leave it.

6. Ok - will play if in the mood.

7. Good - usually willing to play.

8. Very good - enjoy playing and would suggest it.

9. Excellent - very much enjoy playing.

10. Outstanding - will always enjoy playing.

These are typical. IMDb has no official guidelines, but IMDb users describing their own personal interpretations of the scale tend to do it in similar terms.2

Notice the two adjectives around the midpoint of the BGG scale. The word “mediocre” comes from the Latin mediocris, meaning middle height, elevation or quality, originally from ocris, a rugged hill or mountain that would be hard to climb even half way. The English word denotes “average”, but it has a negative connotation. The next keyword, “OK”, probably originated as a humorously bad abbreviation of “all correct”, originally denoting something that is good enough in every meaningful way, but “OK” has pejorated like “mediocre”. In modern English, it suggests reluctance, and on the BGG scale, “OK” is explicitly worse than good. “Good” appears past the midpoint of the scale, and “enjoy” even further along, at 8/10. The words “adequate”, “satisfactory” and “average” are not used. There is no neutral place on the scale. That’s common and probably deliberate.

If you rate a game at 5 according to these guidelines, you do not thereby indicate that it is typical or normal among the games you play, or have ever played. You instead indicate that it is significantly worse than good and that you did not enjoy it. Such guidelines, viewed in isolation, suggest two things. Either the author expects ratings to skew high, as in fact they do, or the author believes that normal experiences are not good, which is dubious. The latter possibility, in turn, splits two ways: Either the author is irrationally pessimistic, or they have espoused some philosophy that distinguishes between two different ranges of quality, such as entertainment distinct from a “true” art that brings a “true” joy. No such philosophy is apparent in the way people actually rate games or movies.

Not all sites where ratings skew high have official guidelines. Though interesting, guidelines don’t seem to influence ratings much. As with the hypothetical WYSIATI bias, I expect a small effect size from guidelines, even in contexts like BGG’s user interface, where the guidelines are readily available.

Reinterpretation

Metacritic’s stated goal is to “help consumers make an informed decision about how to spend their time and money”, a goal that is not served by skewing in either direction, nor by rating on a curve. Nonetheless, Metacritic processes ratings by the undocumented “stature” of critics to produce a weighted “Metascore”. They also make arbitrary editorial choices in the interpretation of individual critics’ scales, which reduces diversity of opinion compared to Rotten Tomatoes. Finally, Metacritic moves the window of “general meaning” based on the medium of a work.3

It is not a stated editorial policy at Metacritic to skew high. However, there is money at stake,4 and historical movie ratings on Metacritic are higher than on Rotten Tomatoes. It is not clear whether conscious ratings inflation on either site would be sustainable as a business practice, but as long as neither editorial policy nor raw data are public, Metacritic could get away with systematic inflation if its staff or owners wanted to. Lots of reviewers do get away with this.5

Given that Amazon owns IMDb, Prime Video, and the Amazon Original brand of streaming video, there is a monetary incentive toward corruption in the case of IMDb. Hypothetically, the aggregator would pick its secret policy of reinterpretation in whatever way maximizes company profit while minimizing the risk of getting caught without a plausible excuse. This is, of course, easier on Amazon Marketplace, where ratings (“Amazon Seller Rating”) are extensively weighted by policy, and skew high. Amazon itself is rated highly by Amazon, on Amazon.

Integrity

Many sellers on Amazon pay their own customers for five-star reviews. There is profit in that because Amazon skews search results based on reviews. It is even easier to skew ratings by registering fake users on sites like IMDb, because IMDb does not track what movies you’ve seen. You can easily find companies providing fake ratings as a service. They are hired to cheat in marketing campaigns, but not all such cheating is commercial. Sites like IMDb and BGG, where reviewers are poorly authenticated, use secret algorithms in part to counteract non-commercial campaigns of dishonest voting. One example is Ghostbusters (2016), which is marked by IMDb with the following note:

Our rating mechanism has detected unusual voting activity on this title. To preserve the reliability of our rating system, an alternate weighting calculation has been applied.

The vote breakdown page for that specific movie shows a conspicuous plurality of bottom ratings and an unusually large proportion of top ratings, on either side of what is otherwise a standard distribution centered on 6. IMDb is correct in identifying that voting activity as unusual. It’s a form of disinformation.

Trying to compensate for dishonest voting on a statistical level is a cheap way to maintain integrity. It means that IMDb does not have to take expensive or commercially risky actions such as singling out trolls, shills and other abusers. A minor downside is that the secret algorithm can skew ratings. The arithmetic mean of all votes on the Ghostbusters remake is somewhere below 6, whereas the official IMDb rating as of 2021-11 is 6.5, higher than the mean of all votes and higher than the mean of the subset of votes that follow the standard distribution. In this specific case, the secret algorithm to fight abuse skews high. In a more normal case, where shills add high ratings and the special algorithm isn’t triggered, the effect is the same: Ratings skew high.
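The comparison in the paragraph above can be made concrete with a small sketch. The vote counts below are invented for illustration and are not IMDb's data. Dropping the 1s and 10s is only a crude stand-in for "the subset of votes that follow the standard distribution". It is not IMDb's weighting, which is secret.

```python
# Hypothetical vote counts per rating (1-10), invented for illustration only.
# They mimic the shape described in the text: a plurality of 1s, an unusually
# large share of 10s, and a bell-like bulk centred near 6.
votes = {1: 30, 2: 2, 3: 5, 4: 9, 5: 14, 6: 18, 7: 14, 8: 9, 9: 5, 10: 20}


def mean_rating(counts):
    """Arithmetic mean of a rating histogram {rating: number_of_votes}."""
    total_votes = sum(counts.values())
    return sum(rating * n for rating, n in counts.items()) / total_votes


# Assumption: treat everything except the extreme ratings as the "bell" subset.
core = {rating: n for rating, n in votes.items() if rating not in (1, 10)}

mean_all = mean_rating(votes)
mean_core = mean_rating(core)

print(f"mean of all votes:      {mean_all:.2f}")
print(f"mean of the bell part:  {mean_core:.2f}")
```

With these made-up numbers, both means land below 6. A published rating of 6.5 would therefore sit above either of them, which is the pattern the paragraph describes.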

Exuberance

I have presented the possibility that editorial policy could skew ratings. On its face, this possibility is neutral. If, hypothetically, IMDb really worked to drive business to Amazon Original content through ratings—which I do not believe is the case—they could do it by deflating other providers, just as well as by inflating their own. However, there are psychological factors that would drive a cheater to skew high.

In a 1996 speech, Alan Greenspan coined the term “irrational exuberance”, referring to a stock-market bubble at that time. The phrase is now one of many names for an underlying psychological tendency in investors. Briefly put, humans are optimistic. We are vulnerable to wishful thinking, especially in a social context. It feels good to be part of a group that likes the same things and is moving onward and upward. Unlike stocks, ratings are free, so ratings skew high.

Beyond trying to find good movies on IMDb, we like to find reviews that are funny, convivial, “life-affirming” and exuberant. The more things that seem to be well liked, the greater the number of successful “in-groups” you will belong to, and the more certain seems the promise of a good life. People who did not tend to think this way have mostly exited the gene pool. In general, even self-reported ratings of people’s quality of life skew high.

On the stock market, irrational exuberance can be self-sustaining. A stock can come to resemble a currency, the value of which is a function of collective faith rather than the underlying material (“real”) economy. There is a parallel in movie reviews. If a strong majority seems to love The Shawshank Redemption (1994), it takes effort to disregard the balance of public opinion.

Like WYSIATI, exuberance is conformistic. Exuberance is also counterproductive. Stock markets crash because of exuberance. Consumers are ill served by generous, optimistic critics who tend to rate high, because that makes it hard to compare two of their recommendations. Like any symbol, a rating can only be meaningful in relation to other symbols. If 100% of businesses on Yelp had 5 out of 5 stars, this would not make all businesses good. Instead it would put Yelp out of business.

Projecting exuberance

Political campaigns can “go negative” on opponents, but corporations promoting their goods rarely do this. Marketers are happy to undermine the self-confidence of consumers to manufacture a sense of need, but it is more efficient for them to be positive about what they’re selling than to be negative about each of their competitors. I guess that even people who buy the votes of fake user accounts on IMDb order high ratings for their own projects, rather than low ratings for box-office rivals.

The reasons are partly economic. IMDb advertises Prime Video because they are both owned by the same megacorp, but IMDb does not openly attack Prime Video’s competitors, such as Netflix. Openly disparaging Netflix is even more risky than attacking a political rival. It risks lawsuits and bad PR which would show up on the bottom line. This is partly because capitalists operate on the pretext that competition is good, but the biggest reasons are rooted in public perception.

Positivity is safe—even in advertising—because most cultures value people who are exuberant, passionate and agreeable. We associate such people with kindness and vivacity. To be generally enthusiastic in this particular way has been a signal of genetic fitness and tribal utility, because unfit individuals would not have energy to spare. To skew high in one’s individual rating on IMDb is a cheap way to try to send that signal.

There is a popular literary equivalent to IMDb called Goodreads. You are more likely to rate a book favourably on Goodreads if you got it from a friend, or that friend wrote it, or even if you just know your friends like it. If your friend wrote a book and you hated it, you are less likely to publish a review at all, because when ratings skew high, a low rating looks offensive. Skewing low, that is systematically rating low, makes you look bad. When you skew low, you project the opposite of exuberance. You will be perceived as unkind, gloomy or pedantic: A “hater”, “bummer” or “negative ninny” who is not on the team. To save your image, you must become a coward, voicing only compliments and omitting criticism. That transfers to higher ratings.

The tendency to project exuberance is mitigated among professional critics who can get the same amount of page views and professional respect for positive as for negative reviews. Working against irrational exuberance and keeping all ratings meaningful is still part of the metaphorical job description. That’s visible in the gap between critics’ and audiences’ scores on sites like Rotten Tomatoes. However, wherever such a gap is shown, there is a natural tendency to dismiss the less exuberant critics as joyless elitists.

Quality

Games have gotten better. The baseline of the industry has shifted, but old ratings have not. As a result, one analyst has speculated that BGG is “running out of room” on its scale.6 IMDb similarly displays near-constant growth in its ratings going back to the invention of cinema. That growth may come from an escalating exuberance, aided by ratings being determined nearer the height of release hype since the launch of IMDb, but it can also come from real improvements, including better technology and technique in production and presentation, as in board games and video games.

Theoretically, if IMDb scrapped all of its ratings and relaunched today, users would still rate recent works higher than old ones, but the median rating might sink by a couple of decimal points because viewers nowadays have higher expectations of the average work than they did in 1993, when IMDb moved to the web.

The exhaustion of quality with experience

According to an earlier study I made of another ratings site, Filmtipset, more experienced critics rate movies lower. Ratings on Filmtipset skew high for those users who’ve rated fewer than 1000 movies, and then start skewing low. They go lower than the midpoint, which is lower than the median rating on IMDb in any era, and lower even than Rotten Tomatoes or Metacritic.

This could be because more experienced viewers gradually run out of good movies. This is probably true among the extreme users, but there must be a counterbalancing effect where only those with a love of the medium—who therefore tend toward higher ratings—make the effort to watch, evaluate and rate a thousand feature films. There must also be another effect whereby dopamine levels decline with familiarity (habituation/accommodation), regardless of quality. In any case, the inexperience of most amateurs is a plausible reason why ratings often skew high, but only on relatively open sites where inexperience is the norm.

Selection bias

Unlike IMDb, Rotten Tomatoes and Metacritic show generally higher ratings for older movies, with a lot of noise. This seems to contradict the hypothesis of rising quality, but doesn’t. Nostalgia and didacticism are probably factors in it, but most of the discrepancy is instead a result of selection bias. The professional critics whose opinions are the basis of RT and Metacritic rarely publish about older works, and when they do, it is generally the most lauded classics which are remastered, rereleased, recirculated at revivals, etc. Bad older works are rarely reviewed by professionals.

Selection bias is also big outside the circle of professional critics. IMDb, which skews higher than RT or Metacritic, lists a broader range of movies and series for review, but it’s only a tiny fraction of all the moving pictures ever produced. About 500 hours of video are uploaded to YouTube each minute. Much more is filmed and never uploaded anywhere. Among countless amateur productions, home video and CCTV, there is something for everyone, but if all of it were rated on IMDb by all those who saw it, the median rating would not skew high.

The same is true in games. Poorly designed and obscure board games do not appear on BGG at all and would be rated poorly if they did.7 When I rate a game on BGG, I still cannot avoid comparing it to all of my other memories of board games, including the ones that are so bad that nobody has bothered adding them to the database. There is a horizon of notability and effort underneath selection bias that hides a lot of chaff from view. It’s consumed, but it isn’t rated. Call it the data-entry bias.

In an even larger context, consumers actively seek pleasure. Goodreads, therefore, is not named “Allreads”. Most of us do what we expect to enjoy and we’re usually right in predicting our own pleasure. Watching random feature films listed on IMDb would, hypothetically, fill out the bell curve on IMDb and send the median rating closer to the midpoint of the scale. That’s one of the reasons why professional critics, not bound to a genre, were more critical in the 1980–2015 era: They had to watch a variety of films instead of what they expected to like. However, it is an open question as to what extent users on IMDb actually compare the works they rate to inferior works they know about but do not rate. It is another open question whether they try to compensate for the former effect.
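A toy model shows how strongly self-selection alone can move the median. It is a sketch under loud assumptions: the catalogue is hypothetical, quality is spread evenly over a 10-point scale, and viewers predict their own enjoyment perfectly. None of this is measured data.

```python
from statistics import median

SCALE = range(1, 11)                       # a 10-point scale, as on IMDb
MIDPOINT = (min(SCALE) + max(SCALE)) / 2   # 5.5

# Hypothetical catalogue: 100 works at every quality level (an assumption).
catalogue = [quality for quality in SCALE for _ in range(100)]


def median_of_rated(works, threshold):
    """Median rating when only works expected to score >= threshold get watched and rated."""
    rated = [quality for quality in works if quality >= threshold]
    return median(rated)


watch_everything = median_of_rated(catalogue, threshold=1)
watch_what_we_like = median_of_rated(catalogue, threshold=6)

print(MIDPOINT, watch_everything, watch_what_we_like)
```

Watching at random leaves the median at the midpoint of the scale. Watching only what one expects to enjoy pushes it well above the midpoint, without any rating being dishonest.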

Loyalty

Selection bias skews ratings higher through one more effect. As shown here, on IMDb, series are rated higher than movies, and episodes of series are rated even higher than complete series. It is not likely that individual episodes are systematically better on average than the shows they constitute. It is also not likely that movies, produced at greater cost and care, are generally less enjoyable than television to audiences on IMDb, yet that is how they’re rated.

One of the reasons why episodes skew especially high is that, like viral videos, they are shorter, less complex than movies or series. They require a smaller investment per episode and their sheer simplicity provides cognitive ease, which is pleasurable. This is especially true for television up through the mid-1990s, which was usually episodic, but it is less true for modern television, which requires investment to keep up with serialized narratives.

I believe the larger effect comes from loyalty. Those IMDb users who bother to rate individual episodes are generally fans of the specific show, while a broader range of users rate the show as a whole. Only the better shows attract loyal per-episode reviewers. Many shows are designed to generate that loyalty, making viewers get to know and care about the characters over the long term, something that is harder to do in movies.

There is a similar effect among critics at large. Since about 2015, Rotten Tomatoes has increasingly featured critics who rate only their favourite genres for narrow audiences watching and loving the same genres. There are also more critics who, in a larger sense, see themselves as loyal members of a threatened film industry, and face direct threats from vocal fans unless they skew high.8

Commitment bias

Another reason why series would be rated highly is precisely that they require a larger investment of time. Once having made that investment, viewers look back and ask themselves “Did I just waste 100 hours of my life?” To answer that question in the affirmative is painful. The greater the investment, the greater the tendency to double down and assert that it was worth it. This may be why Metacritic has different definitions of “Generally Favorable” for different media: Video games, which are longer than movies, require more commitment and skew higher.

Conversely, viewers who do not make the complete investment may decide not to give the series or game any rating, on the grounds of having incomplete information. I watch at least a full season of a series before I publish a review of it, and it is the same with novels. Anything I dislike too much to finish is not reviewed at all. This skews my own ratings higher, especially for longer works.

Bias toward attitude strength

Psychologists have found that strongly expressed attitudes are more resistant to change (e.g. persuasion), more persistent over time, and more consistent with actual human behaviour.9 For example, an answer of 10 on a 10-point scale (“Agree completely”), in a survey about virtually anything, turns out to be more useful information than an answer of 8.

This interpretation of strong attitudes ties into exuberance. At the broadest level, there are social contexts where any weak attitude is seen as phoney or a sign of more general personal weakness. If your weekend was “great” or you have some funny story about how it was “terrible”, you are OK. If your weekend was “OK”, you are not OK. Having neutral experiences marks you as mediocre, in the warped sense of being both normal and bad at the same time.

If this tendency were not moderated by the others, it would produce polarized ratings, with very little at the midpoint and a lot more movies at both extremes. In actuality, most individual movies on IMDb show a mostly-standard unimodal distribution of user ratings in their vote breakdowns, albeit off-centre. Conspicuous bumps at the top and bottom are common in the breakdowns, but 80% of aggregate IMDb ratings lie between 5.0 and 8.5, not at the extremes.

Dubious neutral ground

I have no need for a “Neutral” button on YouTube, between “Like” and “Dislike”. Not many would make the effort to click that button. The “passionately neutral” opinion is a recurring trope of comedy because it sounds like an oxymoron, built on the premise that the strength and valence of an attitude can be disconnected. They can. It is possible to be passionately neutral, but such an opinion cannot be fully expressed in a one-dimensional model like the ten-point scale of IMDb.

We do usually find both strengths and weaknesses in a product, but we tend to pick one side or the other instead of letting them cancel out. When exposed to a “clean” input, such as Kazimir Malevich’s blank painting White on White (1918) or John Cage’s musical composition 4’33” (1952), few people seem to arrive at a neutral opinion. Instead, we end up on the noise floor of our own thoughts and make up something off-centre.

As a result, a middling rating is ambiguous in a way that off-centre ratings are not. The middling rating may express a strong attitude about a neutral or balanced or normal input, but it may also express an attitude that is half-formed and weak, which says little about the input. This ambiguity is unpleasant and can be avoided by skewing in either direction.

Going to extremes

A bias toward attitude strength does not explain why ratings skew especially high in a general person-to-person context, but it may have something to do with peer-to-peer e-commerce (eBay, Tradera etc.), where there is a community norm of acceptable behaviour. Here, users rate high in the absence of norm violations. Precisely because high ratings are very common in e-commerce, anything lower stands out as showing a strong attitude. This enables a low rating to function as a warning of fraud, which is useful. Such a semiotic function is barely applicable to sites like IMDb, which skew less. On IMDb, the norm is to rate high in the presence of entertainment, not yet by default.

The bias toward attitude strength can be oppressive because its actual psychological basis is often misinterpreted and mixed up with prejudice. When you are asked to rate customer service in a survey, for instance at a retail store, there is a strong possibility that the employees who served you will be penalized if you use anything but the top of the scale,10 and penalized further if they tell you about the scale.
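The Net Promoter Score mentioned in the footnote is one concrete case of a survey where only the top of the scale counts. The sketch below uses the commonly published NPS buckets (0 to 6 "detractor", 7 to 8 "passive", 9 to 10 "promoter"). The function names and the sample answers are my own, for illustration.

```python
def nps_category(score):
    """Classify a 0-10 survey answer using the standard NPS buckets."""
    if not 0 <= score <= 10:
        raise ValueError("NPS answers range from 0 to 10")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(scores):
    """Percentage of promoters minus percentage of detractors."""
    promoters = sum(1 for s in scores if nps_category(s) == "promoter")
    detractors = sum(1 for s in scores if nps_category(s) == "detractor")
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical answers: two enthusiasts, one "very good", one midpoint answer.
answers = [10, 9, 8, 5]
print([nps_category(s) for s in answers])
print(net_promoter_score(answers))
```

The midpoint answer of 5 counts against the business exactly as much as a 0 would, and even an 8 adds nothing to the score. Only a 9 or 10 helps.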

Aversion to negative feelings and risk

Psychologists have confirmed other relevant biases. For example, there’s the “acquiescence bias”, a statistical tendency to say yes before considering the merits of a yes-or-no question. There’s also “loss aversion”, where losing an amount of money has twice the psychological weight of gaining the same amount. Hypothetically, loss aversion is a manifestation of a general human tendency to focus on and magnify bad news, despite our tendency toward irrational exuberance in the absence of bad news. We are badly tuned in a way that makes us look for and focus on any indication of negativity, and instead of working against this bias, we skew high to skirt it.

On peer-oriented YouTube, “likes” are far more common than “dislikes”. Since 2021, the number of dislikes is hidden, perhaps to spare the feelings of loss-aversive contributors, but more likely to protect advertisers. Blockbuster movies are also made by people hoping for a kind reception, but it would be a disservice to readers for a critic to pick a rating to protect creators’ feelings when moviegoers outnumber creators.

Lastly, just because skewing high is the norm, it has become a way to hide. For example, if your employer throws a company picnic for 10 people, and then sends out an anonymous survey on how well everybody liked it, a high rating is the safe option. That is partly because you won’t stand out in your manager’s analysis, so they won’t bother identifying you from the rest of your responses. You’ll just be part of the crowd.

The feedback loop

When you cannot hide, you have to skew even higher to be inoffensive. On the Internet, a personal connection that forces you to stand out from the crowd does not require friendship or even familiarity. On marketplaces like eBay and Airbnb, where individual buyers and sellers rate each other directly, the norm of skewing high is especially strong.

Compare the various national and international systems for rating hotels. These systems, being older than the Internet, are not based on customer reviews. They are based on extra features and services. Most systems go from 1 star (or diamond) up to 5, like the Airbnb scale. Every hotel rating from 1 to 5 provides the prospective customer with useful information. Appropriately, the average hotel has 3 stars, which is the middle of the scale. Lots of travellers rationally choose 1-star hotels, depending on which features they require.

Airbnb does not use a system of hotel ratings. It disables listings that fall below a certain rating, based entirely on customer reviews. I can’t find a clear policy on this, but there’s anecdotal evidence that you are delisted as a host if you’re rated at 4.3 on average. 4.3 is 1.3 points above the middle of Airbnb’s scale, which goes from 1 to 5 like hotel ratings, spanning only 4 points.

Airbnb has clearly observed a tendency to skew high. I don’t know whether its staff approve of that tendency, but they have implemented a policy to compensate for it. Under that policy, when you’re looking at the average rating of a host on Airbnb, there is no meaningful difference between any rating from 1.0 up to 4.3. When all such ratings receive the same interpretation from Airbnb (that they are too bad to be listed), more than 80% of the scale goes unused and is therefore wasted. That policy causes hosts to compensate in turn, just like those sellers on Amazon who pay their customers for 5-star reviews, citing the fact that Amazon will hide sellers who are not so highly rated. Similarly, restaurants and delivery workers may request a high rating to avoid being penalized by food-delivery aggregators like Grubhub. Whether they would be penalized or not, the suspicion is enough to drive ratings higher, out of genuine sympathy for the workers as a weaker party under the aggregator.
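The arithmetic behind the Airbnb figures is short enough to check directly. This sketch takes the anecdotal 4.3 cutoff from the text at face value. The cutoff is not a documented Airbnb policy, and the helper names are mine.

```python
def midpoint(low, high):
    """Middle of a rating scale running from low to high."""
    return (low + high) / 2


def unused_fraction(low, high, cutoff):
    """Share of the scale's span at or below the cutoff."""
    return (cutoff - low) / (high - low)


LOW, HIGH = 1, 5   # Airbnb's star scale
CUTOFF = 4.3       # anecdotal delisting threshold (an assumption)

span = HIGH - LOW
above_middle = round(CUTOFF - midpoint(LOW, HIGH), 2)
wasted = unused_fraction(LOW, HIGH, CUTOFF)

print(f"span of the scale: {span} points")
print(f"cutoff lies {above_middle} points above the middle")
print(f"share of the scale at or below the cutoff: {wasted:.1%}")
```

Because the scale starts at 1 rather than 0, it spans only 4 points. A cutoff at 4.3 therefore leaves just 0.7 points, a little under a fifth of the scale, to distinguish every listed host from every other.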

Artificial selection on the marketplace drives sellers to request very high ratings from their customers, citing the risk of being delisted or concealed in search results. This feedback loop is obviously harmful to sellers, who are no longer truthfully rated, but it is also harmful to buyers. In such a loop, ratings become a waste of space. When you see a high rating, you won’t know how much of it was paid for.

Conclusion

Because this is speculation and a couple of anecdotes, I have no definite answers to the question of why ratings get inflated. However, I would like to say that none of the reasons I can think of should convince a critic to skew high. My conclusion is the opposite.

As a reviewer, you can make up your own guidelines and your own scale. If you submit your work to Rotten Tomatoes you interpret it for them. Otherwise, do not worry about the reinterpretation of your opinion by an aggregator like Metacritic or even a bad actor like Airbnb or Amazon. It is more important that your readers can find out for themselves what you think. If you’re honest, bias of every kind is something you want to avoid, both as a critic and as a reader of criticism.

A shifting baseline of quality is a harder problem for the individual critic. I review books and moving pictures, the contents of which get better very slowly. However, in my lifetime, TVs have gone from CRT to µLED technology, improving faster than media content shown on them. If you’re just starting out writing reviews, and especially if you’re doing it in a fast-moving field, my advice is to skew low.

-

Example: Brendan Rettinger, “Cinemath: Do I Agree With IMDB Users? A Statistical Analysis of My IMDB Ratings” (2011-11-27), Collider. Online here. Rettinger glosses over ratings 2–4 as “I did not like this movie” without further distinction. ↩

-

Metacritic labels a movie as having generally favourable reviews with a Metascore of 61–80, but in the case of games, the same wording (“Generally Favorable”) is reserved for a different range of 75–89, with only 5 points of overlap between the two media. Source: “How We Create the Metascore Magic”, Metacritic, read 2021-11-21. Online here. ↩

-

The general commercial importance of a rating is not clearly established. In one famous example where it did matter to creators, game publisher Bethesda denied development studio Obsidian a bonus for Fallout: New Vegas (2010) because the game received a rating of 84 on Metacritic, lower than the target number of 85. ↩

-

For example, at the time of writing, of the last 30 reviews on whathifi.com, one is at the midpoint of the five-star scale. The other 29 are all at 4 or 5 stars, with nothing below the midpoint. What Hi-Fi? would not be receiving so many free products to review if it were not so business-friendly, nor would it be selling so many ads in its magazine. It, and many operators like it, drive business by providing something in the grey area between producer-focused publicity (promotion), and unbiased consumer-focused criticism. ↩

-

Oliver Roeder, “Players Have Crowned A New Best Board Game — And It May Be Tough To Topple” (2018-04-20), FiveThirtyEight. Online here. ↩

-

Example: Bamse och Dunderklockan (2018), a tie-in to Bamse and the Thunderbell (2018). As of 2021, this game is not on BGG. ↩

-

Max Hartshorn, “Movies Are Scoring Higher and Higher on Rotten Tomatoes — But Why?” (2021-06-16), Global News. Online here. ↩

-

Richard E. Petty, Curtis P. Haugtvedt and Stephen M. Smith, “Elaboration as a determinant of attitude strength: Creating attitudes that are persistent, resistant, and predictive of behavior”, a chapter of Attitude strength: Antecedents and consequences (1995). ↩

-

Example: Official documentation of the Net Promoter Score (NPS) describes an 11-point scale from 0 to 10. Anyone who rates their customer experience at the midpoint of this scale is characterized as a “detractor” of the business (netpromoter.com, 2021-11-21). This offshoot of Taylorism is commonly implemented in such a way that workers are arbitrarily punished (reprimanded, demoted, paid less or fired) for the existence of neutral customers. ↩