More experienced critics rate movies lower

Statistical patterns on Filmtipset

This article uses data from Filmtipset to show a tendency in the critical discernment of cineasts.

Filmtipset is a Swedish “web 2.0” forum founded in the year 2000. On it, registered users rate (grade) movies, mainly feature films, on a scale of 1–5. Based on these ratings, the site offers automated suggestions about what to watch next. Because those suggestions are only as good as the ratings they are based on, the recommendation algorithm creates an incentive for honesty. There is no instruction as to how the available ratings should be applied.

I use the same ratings on Filmtipset as I do on this site. New readers of my reviews often perceive me to be stingy with my ratings, as though I am bitter or even dishonest in begrudging their favourites a fair appraisal. My ratings are indeed lower than average on Filmtipset. In this article, I am not going to go into why this is. The following statistical analysis simply demonstrates the fact itself, and the existence of a general pattern.

About the data set

The data used in this article was collected in the summer of 2021 with a web crawler I wrote for the purpose, based on pyquery. I did not crawl the entire site. Instead, I began by collecting the 10000 shortest user names consisting only of lower-case letters. I then inspected the personal connections (“friends”) of each of those users, adding them recursively to the data set.
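
The crawl can be sketched as a breadth-first search over the friend graph. This is a minimal sketch, not the crawler used for this article. `FRIENDS`, `user_exists` and `get_friends` are hypothetical stand-ins for the HTTP requests and pyquery parsing the real crawler performed. The seeding step assumes that “shortest” means trying candidate names in the order a, b, ..., z, aa, ab, ... and keeping those that exist.

```python
import itertools
import string
from collections import deque

# Hypothetical friend lists standing in for Filmtipset profile pages.
FRIENDS = {
    "a": ["bo", "cd"],
    "bo": ["a", "eva"],
    "cd": [],
    "eva": ["kim"],
    "kim": [],
    "x": [],
}

def user_exists(name):
    """Hypothetical check; a real crawler would request the profile page."""
    return name in FRIENDS

def get_friends(name):
    """Hypothetical: the names listed as friends on a user's profile."""
    return FRIENDS.get(name, [])

def seed_names(n, max_len=3):
    """Return the first n existing names, trying a, b, ..., z, aa, ab, ... in order."""
    found = []
    for length in range(1, max_len + 1):
        for letters in itertools.product(string.ascii_lowercase, repeat=length):
            name = "".join(letters)
            if user_exists(name):
                found.append(name)
                if len(found) == n:
                    return found
    return found

def crawl(seeds):
    """Breadth-first search over the friend graph; returns every reachable user."""
    seen = set(seeds)
    queue = deque(seeds)
    while queue:
        for friend in get_friends(queue.popleft()):
            if friend not in seen:  # friendships form cycles; visit each user once
                seen.add(friend)
                queue.append(friend)
    return seen
```

Friendships are mutual, so the graph is full of cycles. The `seen` set ensures each profile is fetched only once. The search ends when every user reachable from the seeds has been visited, which is the point at which the graphs of personal connections are exhausted.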

When all of the interconnected graphs of personal connections were exhausted, I had the opinions of 48828 unique reviewers. Of these, I discarded 2142 reviewers who had registered fewer than 10 ratings, judging these to be too noisy. Of the remainder, I discarded a further 40 because their mean rating was either lower than 1.1 or higher than 4.9; I took this to indicate that they were using the site for something other than honest opinion. The following analysis thus covers 46646 reviewers, including myself.
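
The two filters amount to a simple test on each reviewer's list of ratings. The sketch below is minimal and rests on two assumptions. First, the ratings are stored in a hypothetical dict that maps each user name to a list of integers from 1 to 5. Second, the bounds on the mean are inclusive.

```python
MIN_RATINGS = 10               # fewer ratings than this: judged too noisy
MIN_MEAN, MAX_MEAN = 1.1, 4.9  # means outside this range: not honest opinion

def keep(ratings):
    """Return True if a reviewer passes both filters."""
    if len(ratings) < MIN_RATINGS:
        return False
    mean = sum(ratings) / len(ratings)
    return MIN_MEAN <= mean <= MAX_MEAN

def filter_reviewers(reviewers):
    """Keep only the reviewers that pass both filters.

    reviewers: hypothetical dict of user name -> list of ratings (1-5)."""
    return {name: ratings for name, ratings in reviewers.items() if keep(ratings)}
```

Applied to the counts above: 48828 - 2142 - 40 = 46646.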

Looking at each of these reviewers without regard to their levels of activity, the arithmetic mean of their mean ratings is 3.22. Pooling all of their ratings instead, so that each reviewer is weighted by activity, the mean is 2.98 with a variance of 1.07. Unsurprisingly, the median rating is 3 and the overall distribution is broadly Gaussian.
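
The gap between the two means comes from how reviewers are weighted. In the sketch below, ratings are assumed to sit in a hypothetical dict that maps each user name to a list of ratings. The variance is computed as the population variance over the pooled ratings. That choice is an assumption, because the article does not say which estimator was used.

```python
def unweighted_mean(reviewers):
    """Mean of each reviewer's mean rating: every reviewer counts equally."""
    means = [sum(r) / len(r) for r in reviewers.values()]
    return sum(means) / len(means)

def weighted_stats(reviewers):
    """Mean and population variance over all ratings pooled together.

    Pooling is equivalent to weighting each reviewer's mean by the
    number of ratings that reviewer registered."""
    pooled = [x for r in reviewers.values() for x in r]
    mean = sum(pooled) / len(pooled)
    variance = sum((x - mean) ** 2 for x in pooled) / len(pooled)
    return mean, variance
```

Consider a toy example. A casual reviewer has 10 ratings averaging 4.0, and a veteran has 30 ratings averaging 2.5. Their unweighted mean is 3.25, but the pooled mean is 2.875, because the veteran contributes three times as many ratings.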

There is some corruption in this data set, as discussed below, and it is not a complete picture of the site: although every user’s ratings are public, there is no public roster of all users.

Reviewer activity

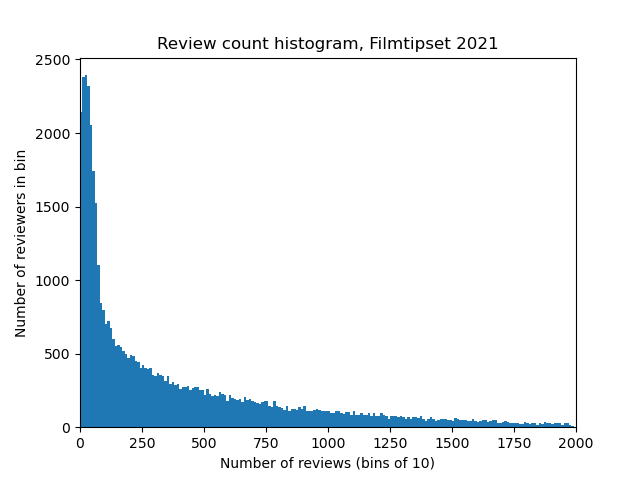

The most common number of reviews per reviewer in the data set is 37.

More active reviewers are less common: their numbers taper off from the peak at 37 as activity increases. Notice that “activity” here is strictly historical. The majority of reviewers who appear in the data set have long since stopped rating movies on the site. A very small number are duplicate accounts, created around 2018, when the site was unmaintained and functioning so poorly that lost passwords could not be reset.
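
The peak at 37 is the mode of the per-reviewer rating counts. Here is a minimal sketch of that computation, assuming a hypothetical dict that maps each user name to a list of ratings.

```python
from collections import Counter

def most_common_activity(reviewers):
    """Return the rating count shared by the most reviewers (the mode).

    reviewers: hypothetical dict of user name -> list of ratings."""
    histogram = Counter(len(ratings) for ratings in reviewers.values())
    activity, _ = histogram.most_common(1)[0]
    return activity
```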

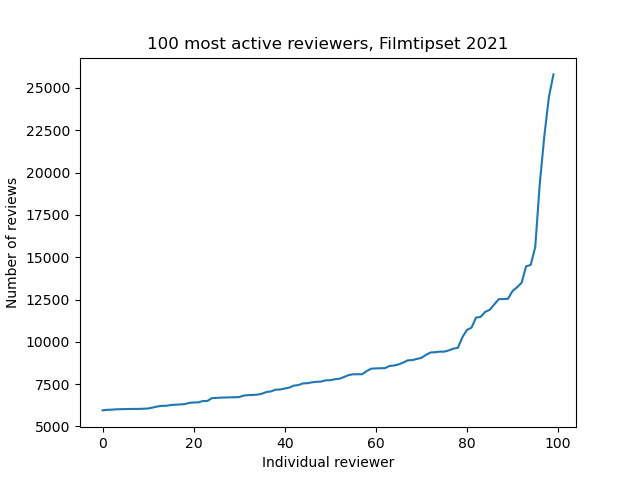

At the extreme far end of user activity, 21 reviewers have more than 10000 reviews, some reaching above 20000.

Correlating activity and ratings

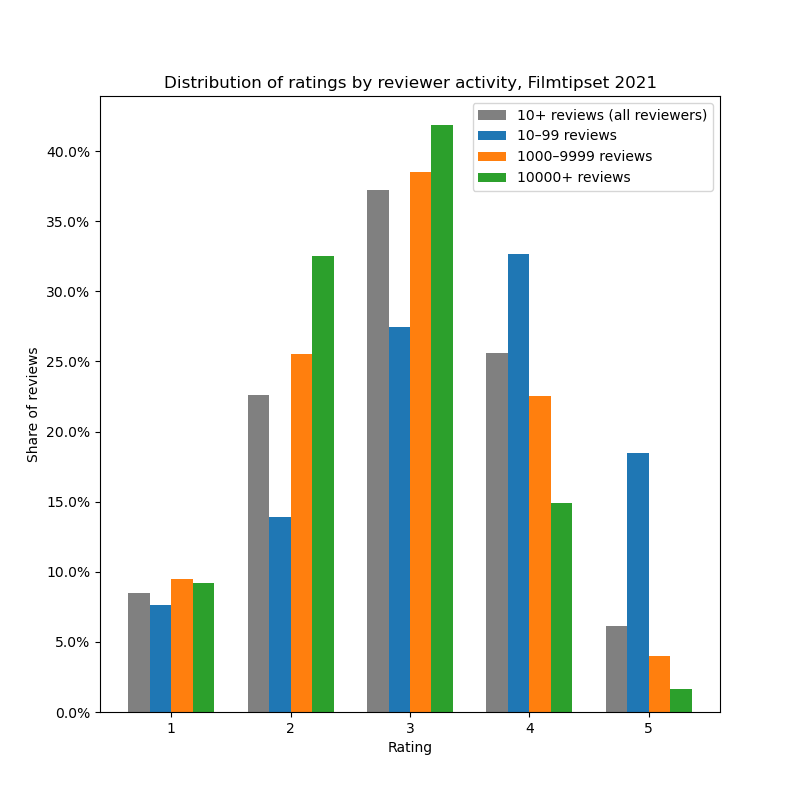

This bar chart demonstrates why the activity-weighted mean rating is lower than the unweighted mean: less active reviewers are more generous with high ratings.

There is no correlation with activity for the rating of 1, which hovers around 8% in all groups. The rating of 2, however, is more than twice as common among the most experienced reviewers as among the least experienced. The rating of 3 is the most common in all groups except the least experienced, who use 4 more often. Finally, the least experienced reviewers use the rating of 5 many times more often than the most experienced, with usage dropping off from 18% to a low of 2%.
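
The article does not state how the activity groups in the bar chart were drawn. The sketch below makes two hypothetical choices. The groups are orders of magnitude (10–99, 100–999, and so on). Each share is computed over all ratings pooled within a group, so active reviewers weigh more, rather than by averaging each reviewer's own shares.

```python
from collections import Counter, defaultdict

def activity_group(count):
    """Hypothetical grouping by order of magnitude: 10-99 -> 1, 100-999 -> 2, ..."""
    return len(str(count)) - 1

def rating_shares(reviewers):
    """Share of each rating 1-5 among all ratings pooled within each activity group.

    reviewers: hypothetical dict of user name -> list of ratings."""
    tallies = defaultdict(Counter)
    for ratings in reviewers.values():
        tallies[activity_group(len(ratings))].update(ratings)
    shares = {}
    for group, tally in sorted(tallies.items()):
        total = sum(tally.values())
        shares[group] = {rating: tally[rating] / total for rating in range(1, 6)}
    return shares
```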

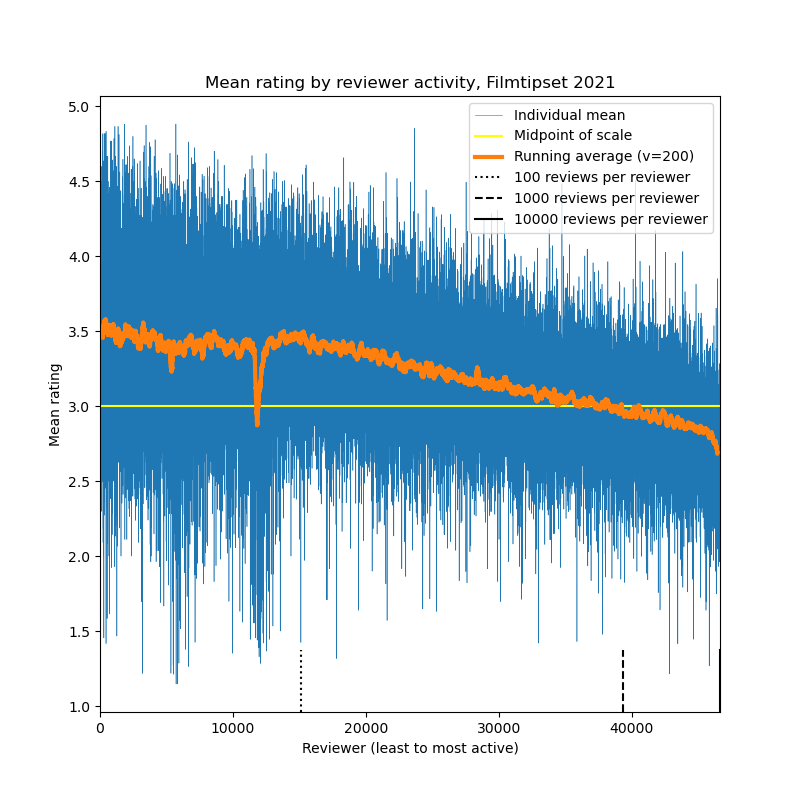

As shown in the bar chart, both the least experienced and the most experienced reviewers depart from the Gaussian distribution, but in opposite directions. The more complex plot above shows how gradual that development is.

Mean ratings are high at 3.4 and almost constant among the least active, from 10 to 100 reviews. There is a trough around 40 reviews, deep enough to affect the running average. This trough is probably an artifact of automated brigading. It inflates the number of low ratings (1s and 2s) given by the least active group in the bar chart.
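
A running average like the one mentioned above can be computed in three steps: sort reviewers by activity, then average their individual means over a sliding window, then plot each window at its middle reviewer's activity. This is a sketch under stated assumptions. The window size, the use of the middle reviewer's activity as the x coordinate, and the dict layout are all hypothetical, since the article does not describe how its plot was produced.

```python
from itertools import accumulate

def running_average(reviewers, window):
    """Moving average of individual mean ratings, reviewers sorted by activity.

    Returns one (activity, average) pair per full window; the activity is
    that of the reviewer in the middle of the window."""
    points = sorted((len(r), sum(r) / len(r)) for r in reviewers.values())
    # prefix[i] is the sum of the first i individual means
    prefix = [0.0, *accumulate(mean for _, mean in points)]
    return [
        (points[i + window // 2][0], (prefix[i + window] - prefix[i]) / window)
        for i in range(len(points) - window + 1)
    ]
```

A cluster of anomalous accounts with low means around one activity level pulls down every window that overlaps it. That is how a local trough can show up in the curve.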

Starting at 100 reviews, the data is less noisy. There is an almost linear trend of ratings decreasing with experience. At roughly 1000 reviews, individual means intersect the midpoint of the ratings scale, at 3. Those with 1000–9999 reviews have a combined mean rating, weighted by activity, of 2.86. The drop continues past that point and gets noticeably steeper at the end, where the number of reviews also rises more sharply. The extreme right looks like the beak of a bird-eating bird. The 21 most active dip to a mean rating of 2.67, again weighted by activity.
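
The combined, activity-weighted mean for a band such as 1000–9999 reviews is the mean of all ratings pooled from that band. The slope of the trend can be estimated with ordinary least squares. This sketch assumes, hypothetically, that activity is read on a logarithmic axis and that the fit starts at 100 reviews, where the data becomes less noisy. Both choices are illustrations, not the method behind the plot.

```python
import math

def combined_mean(reviewers, low, high):
    """Activity-weighted mean rating of reviewers with low <= count <= high."""
    pooled = [x for r in reviewers.values() if low <= len(r) <= high for x in r]
    return sum(pooled) / len(pooled)

def log_trend(reviewers, min_count=100):
    """Least-squares slope of individual mean rating against log10(activity).

    Reviewers with fewer than min_count ratings are left out of the fit."""
    xs, ys = [], []
    for r in reviewers.values():
        if len(r) >= min_count:
            xs.append(math.log10(len(r)))
            ys.append(sum(r) / len(r))
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx
```

A negative slope means ratings fall as activity grows. With a log axis, the slope is the change in mean rating per tenfold increase in reviews.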

Analysis

I refrain, in this article, from speculating on general reasons why more experienced critics rate movies lower. However, I will note a specific feature of Filmtipset contributing to that effect.

When a new user registers on the site, they are asked to rate specific movies to get the algorithm going. This is known as the starter kit (startpaket). It has changed slowly over time. I signed on in 2007 and I believe I was offered Citizen Kane (1941), Casablanca (1942), Deliverance (1972), The Shining (1980), Ghostbusters (1984), When Harry Met Sally (1989), The Silence of the Lambs (1991), Forrest Gump (1994), The Usual Suspects (1995) and Fargo (1996). All of those are critical darlings or popular classics, and most of them are both. For the benefit of the algorithm I was also offered more polarizing material, like Superman: The Movie (1978), Crocodile Dundee (1986), Black Rain (1989), The Hunt for Red October (1990), Basic Instinct (1992), Four Weddings and a Funeral (1994), Interview with the Vampire: The Vampire Chronicles (1994), Scream (1996), Trainspotting (1996) and As Good as It Gets (1997), as well as various Swedish films, including My Life as a Dog (1985).

Some of the users in my data set, those with 10 reviews or only a few more, apparently rated the starter kit at 3.5 on average and never looked further. This is partly because the films in the kit are supposed to be good. The kit is described on the site as “the best known and most standard-setting sets of films in each genre” (mest kända och stilsättande uppsättningarna i respektive genre). Two of the films in it are ones that even I rate at 5: A Clockwork Orange (1971) and Twelve Monkeys (1995).

The starter kit is based in part on what a new user is most likely to have seen and to remember. This correlates positively with higher ratings. Having thus “used up” some of the classics, those who look further do not sustain the higher ratings. Most users moving beyond the starter kit thus set gradually lower ratings. That tendency cannot be useful to the algorithm, but offering only the most polarizing and mutually dissimilar films to new users may be less palatable. Other reasons why more active reviewers rate movies lower are not specific to Filmtipset.

Conclusion in the case of the author

As of 2021-08, I have 4670 ratings on Filmtipset, putting me in the 99th percentile of user activity. My mean rating is 2.15. On my own website (this one), where I review a broader range of media, it’s even lower at 2.13.

There are 3225 reviewers in my data set with a mean rating of 2.15 ± 0.5. That’s 7% of the user base. I am indeed an outlier when ratings are not standardized or interpreted. This is partly attributable to experience, but my ratings are low even compared to those with more experience.
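
Both figures in this section are simple counts over the data set. The sketch below assumes a hypothetical dict that maps each user name to a list of ratings. It defines a reviewer's percentile as the share of reviewers with strictly fewer ratings, which is one of several common definitions.

```python
def share_near(reviewers, target, tolerance):
    """Fraction of reviewers whose mean rating lies within target ± tolerance."""
    means = [sum(r) / len(r) for r in reviewers.values()]
    near = sum(1 for m in means if abs(m - target) <= tolerance)
    return near / len(means)

def activity_percentile(reviewers, count):
    """Percentage of reviewers with strictly fewer ratings than `count`."""
    below = sum(1 for r in reviewers.values() if len(r) < count)
    return 100 * below / len(reviewers)
```

With the article's figures, 3225 / 46646 ≈ 0.069, which rounds to the 7% quoted above.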